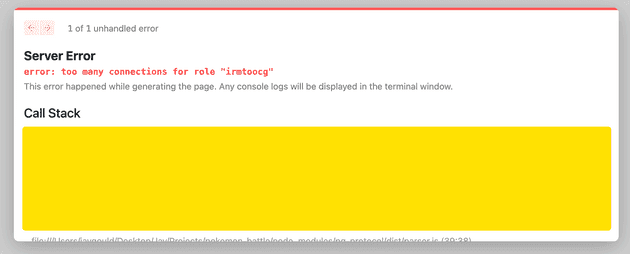

The 'too many connections for role' error with Postgres on serverless

April 06, 2022

During the development of a side project last month I was trying to build a full web app for free for demo pruposes, so decided to use Vercel hosting with an ElephantSQL Postgres database. When building production servers with Next.js I usually go for a custom Node.js server setup, but the aim of the project was to build as quick and easy as possible, so opted for Vercel’s serverless functions instead. This ultimately lead to a 3 hour frustrated evening trying to get around a connection pooling problem from node-postgres which was error: too many connections for role "xxxxx":

The problem - “too many connections for role” on Postgres

The issue was clear - ElephantSQL has a 5 connection limit on their free plan, and I was exhausting them very quickly in my application because of the nature of serverless functions, which is that the server can’t share a connection to the database like a traditional web application.

Let’s take a Node.js Express server for example. In a traditional server architecture like this, a server may be started by running an index.js file, and within that file may be an include for Express to run the actual server:

const express = require("express")

const app = express()

const port = 3000

app.get("/", (req, res) => {

res.send("Hello World!")

})

app.listen(port, () => {

console.log(`Example app listening on port ${port}`)

})Within this server may be a setup for a database connection, with a query happening on each page load:

const express = require("express")

const app = express()

const port = 3000

const { Sequelize } = require("sequelize")

const sequelize = new Sequelize(

"postgres://username:[email protected]:5432/databasename"

)

app.get("/", (req, res) => {

const pageData = await sequelize.query('SELECT * FROM table')

res.send({ pageData })

})

app.listen(port, () => {

console.log(`Example app listening on port ${port}`)

})As the server is constantly running in this situation, all requests to the homepage are hitting a server with a single database connection. This means with a load of requests and a single database connection (no pooling involved), a database could handle a fair amount of queries at one time as it will just run them in series.

Actually, in this situation above, Sequelize is installed which handles automatic connection pooling, so it would spread the queries over a default of 5 connection pools, but ignore that for now.

In a serverless environment however, there’s no “state” or persistence between different instances of the server. That is to say two separate requests at the same time to the same server will be running on a different instance, hence there’s no way to share a database connection. This leads to the “too many connections for role” error as each request to my application would open a new connection to ElephantSQL, which has a hard limit of 5 concurrent connections.

The solution - closing connections properly with Node Postgres

After a good while of following the docs for connections on node-postgres, I was still having the same problem. The docs explain that “the easiest and by far most common way to use node-postgres is through a connection pool”, which is what I was setting up. The following is what the docs say about setting up the node-postgres package:

// adapted from node-postgres.com docs

const { Pool } = require("pg")

const pool = new Pool()

async function myDatabaseQuery() {

const client = await pool.connect()

try {

const res = await client.query("SELECT * FROM users WHERE id = $1", [1])

console.log(res.rows[0])

} finally {

// Make sure to release the client before any error handling,

// just in case the error handling itself throws an error.

client.release()

}

}This works ok during development with a free tier, 5 connection limit database, but in production when there was more than a 3 or 4 users using the app at one time, I would get the “too many connections” error.

The error is because the client.release() action returns the client to the pool, but the pool itself is still open. Due to the way the serverless functions work, each time a user was accessing the app, a new connection pool would be created - each one on a new connection. When the query had finished, the client is returned back to the pool fine, but the pool is still open.

One solution in this situation is to ensure that the pool is shut down, hence closing one of the connections to the database. This is done with the client.end() function. Here’s how the above code snippet would look with this update:

// adapted from node-postgres.com docs

const { Pool } = require("pg")

const pool = new Pool()

async function myDatabaseQuery() {

const client = await pool.connect()

try {

const res = await client.query("SELECT * FROM users WHERE id = $1", [1])

console.log(res.rows[0])

} finally {

await client.release()

await client.end()

}

}Now this solution is ok for a small app with not a huge amount of traffic, but as there’s limit of 5 open connections for my free database plan, database queries are likely to fail if there are a number of people accessing the app at one time.

One reason is that we are not making use of one of the huge benefits of Postgres which is the connection pooling. Instead, we’re opening a direct database connection, and closing it straight after the query is finished. This is not the most efficient way of doing things - enter connection pooling.

Connection pooling with Vercel

In the old days of databases on the web, in order for an application to run a query on a database it would first need to connect to the database, exchanging security information to authenticate the request, perform the query, then close the connection. Although this process could take mere milliseconds, those milliseconds add up when hundreds or thousands of queries are being executed at once. That’s why connection pooling is useful, and why I wanted to implement that database design on my Vercel app.

I tried to get connection pooling set up with Vercel by following a number of articles, incluidng this one which I felt was the closest solution I’d reached, but still had no joy getting it fully functional. I think the problem was because the connection simply was not being shared across multiple instances like the article suggests will happen.

I’ll be back with an update on setting up proper connection pooling with Vercel soon, but in the meantime the above solution will do fine for most small - medium sized projects.

Senior Engineer at Haven