An intro to Docker and managing your own containers - Part 1

April 26, 2020

This is part 1 of a 2 part post about Docker for web development.

- Part 1: An intro to Docker and managing your own containers - [you are here]

- Part 2: Using Docker as a development environment

Intro

After using Docker as a development environment for a while on a few small projects, I’ve decided to update my public projects to run inside containers for users who want to get up and running quickly. Although the Docker docs are good, they didn’t always contain what I wanted to know at the moment I needed it, especially for Node projects. That’s why this series of posts is dedicated to getting you up and running with Docker. It focuses on the basics, plus some helpful side points that paint a better picture of the role Docker can play in running an app, and of Docker as a tool for developing and deploying your application.

Specifically, this post covers:

- What is Docker?

- A quick Hello World intro to Docker

- Running a simple Docker instance from Docker Hub

- Creating a Docker image of your own application so it can be shared and run in any environment

- Updating your own images and the difference between Dockerfiles and Docker commits for version management

What is Docker?

If you’ve already been using Docker and have seen the thousands of posts out there explaining what it is, you may want to skip this part. For everyone else: Docker is a tool that makes it easier to run applications by running them in containers.

Running apps the traditional way (without containers) means your whole machine needs the right version of PostgreSQL or Node, for example. That may not seem like a big deal, but fast forward 6 months. You haven’t worked on “that app” in forever, you run npm install and npm start, and errors start stacking up in your terminal. Maybe you updated your OS, your global Node, NPM or Postgres version, or something else, and your app is no longer compatible.

Docker helps alleviate this problem by running your app in a container. A Docker container is a self-contained environment, isolated from your host machine and from other containers, that runs specific versions of software. This software can be:

- Web servers (Apache, Nginx etc.)

- App frameworks (Node, Laravel, WordPress etc.)

- Databases (PostgreSQL, MySQL, MongoDB etc.)

- Even programming language runtimes (JavaScript, PHP, Golang etc.)

Your application source code and any combination of software can be run in a container, allowing you to get up and running very quickly. This kind of setup also means your application can be developed in a consistent environment, deployed to production more reliably, and tested in isolation.

Using Docker - The Hello World

I normally don’t like pushing “hello world” examples, because I feel they make a technology look like a novelty and don’t show its nuances. With Docker, however, I’m making an exception. Its “hello world” example is a great way to illustrate what Docker does and how to use the basics.

To get started, head over to the Docker website where you can find installation instructions for Windows or Mac. Follow those instructions and install Docker.

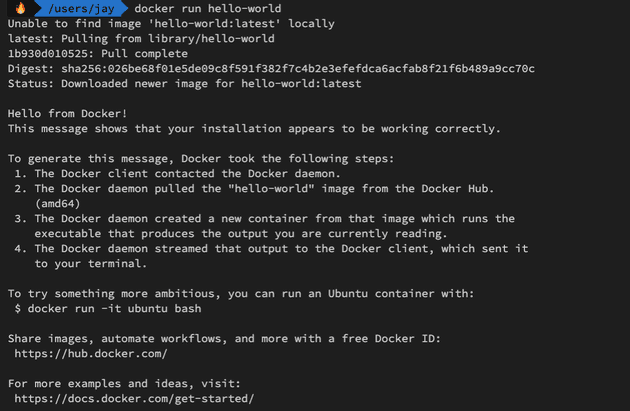

Here, you’ll also find the “hello world” example for how to use Docker. Go ahead and run the command docker run hello-world. You should see output like the following if Docker is installed successfully:

First, this command tells Docker to look on your local host machine for the hello-world image. If you’ve just installed Docker, you’ll have no images on your machine yet, so Docker looks for hello-world on the Docker Hub image registry and pulls it down. Docker then creates a new container from the image it has just pulled and runs it, which prints the output shown above.

Some keywords used here and in the output above are worth explaining:

- Docker Client - this is the CLI which you, as a developer/engineer, use. It communicates with the Docker Daemon.

- Docker Daemon - this does the heavy lifting in Docker and is responsible for performing the actions it receives from the client, e.g. pulling an image from a registry.

- Host machine - this is commonly used to refer to the machine which your Docker installation is running on.

- Image - this is similar to an image like an .ISO file from back in the day: a packaged snapshot of an application or service (its code, runtime and dependencies) at a moment in time. The hello-world example is its own image, which Docker pulled down to your machine.

- Container - this is an instance of an image on the host machine, which can be running or stopped.

- Docker Hub - a registry of pretty much any image you can imagine, all listed on an official Docker powered platform. You can also host your own images on Docker Hub, but I’ll come on to that later.

To help understand how Docker works, I think it’s best to jump right in and use the CLI to run routine commands that view, delete and retrieve images and containers.

Maintaining Docker images using the CLI

You’ve just run the hello-world example above, which downloaded the hello-world image. From here, you can list, remove, run, and generally manage images. All commands begin with docker, similar to many other Linux-based CLI tools. Here are a few basic commands grouped together:

- docker run [image name] - as mentioned above, this runs an image if you have it locally. Otherwise it searches Docker Hub, downloads the image, and then runs it.

- docker pull [image name] - this pulls the image but doesn’t run it. This is useful for pulling down images you may want to use later. More on that later!

- docker images - shows a list of images on your machine.

- docker ps -a - shows a list of containers on your machine (both running and exited instances of images).

- docker stop [container id] - stops a running container.

- docker rm [container id] - removes a container from your machine.

- docker rmi [image id] - removes an image from your machine. Any containers created from it must be stopped and removed first for this to succeed.

- docker exec -it [container id] /bin/bash - runs bash inside a running container for debugging or general maintenance.

- docker logs [container id] - shows the output (logs) of a container.

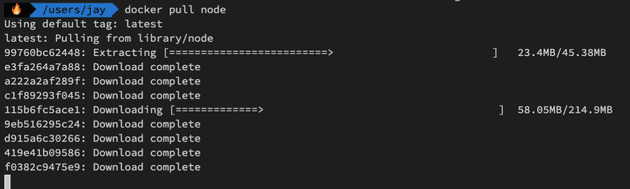

To show all these in use, we’ll download the Node image and perform operations on it. The commands will become second nature in no time if you practice with a few images from Docker Hub.

First, pull down the Node image from Docker Hub with docker pull node. This will begin downloading the Node image:

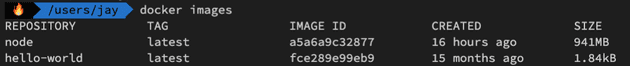

Once downloaded, we can view the list of images on our host machine so far. If you’ve followed the steps above, this will be the hello-world and node images:

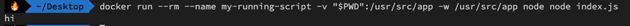

Now that we have the Node image, the next step is to run it. Most images, especially the most popular ones, come with instructions on how to run them. It’s often not as simple as running docker run node, because additional options are likely to be required. In the case of the Node image, the GitHub readme contains everything you need. Here’s the command:

```shell
docker run -it --rm --name my-running-script -v "$PWD":/usr/src/app -w /usr/src/app node node index.js
```

Here is what each part does:

- The -it option is short for --interactive + --tty. It runs the container in interactive mode with a terminal attached, so you can interact with the container when it opens.

- The --rm option tells Docker to automatically remove the container once it exits.

- The --name option sets the name of the container. This makes it easier to run operations on it later. In this example we call the container “my-running-script”.

- -v "$PWD":/usr/src/app mounts your current directory into the container at /usr/src/app.

- -w /usr/src/app makes that directory the working directory.

- Finally, node is the image to run, and node index.js is the command to run inside the container. Here, index.js is the file you want Node to execute.

To try this, create an index.js file in your current directory, add a simple console log to it, and run the above command to view the results:

The image we pulled from Docker Hub has now been run in a container, and its output printed to the screen. We could have run this without Node installed on our machine at all! That’s the beauty of Docker.
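A plain console.log is all the command above needs. The sketch below is a hypothetical, slightly richer index.js that makes the point concrete. Every value is read inside the container, so the output reports the Node version from the node image rather than whatever (if anything) is installed on your host. The describeRuntime name is made up for illustration.

```javascript
// index.js: hypothetical script for the `docker run ... node node index.js` command.
// Every value below is read from inside the container, not from your host machine.
const os = require("os");

function describeRuntime() {
  return [
    `Hello from Node ${process.version}`, // Node version shipped in the node image
    `platform: ${os.platform()}`,         // "linux" for the Linux-based node image
    `hostname: ${os.hostname()}`,         // by default, the container's short ID
    `cwd: ${process.cwd()}`,              // /usr/src/app when run with -w /usr/src/app
  ].join("\n");
}

console.log(describeRuntime());
```

Running the same file directly on your host with node index.js prints your host’s values instead. That is an easy way to see the isolation for yourself.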

The above run command shows a very simple way of running a public image straight from Docker Hub. Most of the time, though, you’ll be using Docker for more complex situations with custom settings, and this is where a Dockerfile comes in. We’ll cover that later.

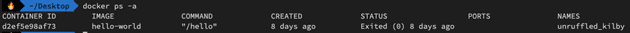

So far we’ve run the Node image in a container. Go ahead and run docker ps -a. This lists all the containers created from our images, whether they are running or have previously run:

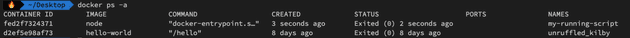

You’ll see the hello-world container is there, but not the Node container. This is because we ran the Node command with the --rm option. Run the same Node command again, but omit --rm. You should still see your console log, and if you then run docker ps -a again, you’ll see the container:

The status of both our containers is “Exited (0)…”. If a container were still running, for example an Apache server, we could stop it with docker stop [container id].

The [container id] can be any of three things:

- the container’s name, in my case “my-running-script”

- its full container ID

- any prefix of the ID that matches only one container, such as its first three characters

You can also pass multiple containers at once, such as:

```shell
docker stop 3ef 53e 55a
```
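The short-ID rule can be sketched in a few lines. A prefix is accepted only if it matches exactly one container. The resolveContainer helper and the sample IDs and names below are hypothetical. They mimic the behaviour described above and are not Docker’s own code or error messages.

```javascript
// Hypothetical helper: resolve a user-supplied reference to one container,
// mimicking how the Docker CLI accepts a name, a full ID, or a unique ID prefix.
function resolveContainer(ref, containers) {
  // An exact name or full-ID match wins outright.
  const exact = containers.find((c) => c.name === ref || c.id === ref);
  if (exact) return exact;

  // Otherwise treat the reference as an ID prefix, which must be unambiguous.
  const matches = containers.filter((c) => c.id.startsWith(ref));
  if (matches.length === 0) throw new Error(`No such container: ${ref}`);
  if (matches.length > 1) throw new Error(`Multiple containers match prefix: ${ref}`);
  return matches[0];
}

// Made-up sample data using 12-character short IDs, as shown by docker ps.
const containers = [
  { id: "3ef1a9c2d4b7", name: "my-running-script" },
  { id: "53e0b8f6a1c9", name: "hello-world-demo" },
  { id: "53a7d2e4f0b1", name: "simple-app" },
];

console.log(resolveContainer("3e", containers).name);              // my-running-script
console.log(resolveContainer("my-running-script", containers).id); // 3ef1a9c2d4b7
```

Here "53" would be rejected because two IDs start with it, while "53e" identifies a single container.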

A running container must be stopped before it can be removed from the host machine. Why would we want to stop and remove a container? Containers take up host machine resources: running ones use CPU and memory, and stopped ones still use disk space. Remove the container with docker rm [container id].

It’s worth pointing out that even though the container is removed, the image is still present on your machine. Images don’t run by themselves; only containers created from them do. So you can keep as many images on your machine as disk space permits.

If you no longer need it, you can remove the image itself with docker rmi [image id].

With fundamental commands covered, the next logical step is to cover creating your own images using Dockerfiles.

Creating your own image to run and share with others

The end goal at this point is to be able to easily share an image of your app with someone else, who should then be able to run your application in a container. This means your image must contain everything required to run your app, such as NPM or Yarn dependencies. To make Docker really useful, you also don’t want to be running long, complex-looking commands like the Node command we used earlier.

A quick prerequisite for this section - in order to share our image we’ll upload to Docker Hub. If you’ve not done so already, head over to the Docker Hub site and create an account.

Creating a Dockerfile

That’s where Dockerfiles come in. A Dockerfile specifies what goes into your app’s image and how its container will run, so that others get exactly the environment you set. It lets you run build commands for your app, update the file system, or do anything else needed to get your app built and running.

Let’s say you have a simple Node or React project which has a package.json file listing dependencies. This is a perfect kind of project to write a simple Dockerfile for. Here’s what the Dockerfile could look like:

```dockerfile
# Base image to run on Node V10
FROM node:10

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
COPY package*.json ./
RUN npm install

# Bundle app source
COPY . .

# Expose the port running inside the container
EXPOSE 1138

# Execute the command to run your application when the image is run
CMD [ "npm", "start" ]
```

A quick breakdown of what’s happening: first, the Dockerfile builds on the base image node:10, which provides Node and NPM. Most Docker images are built on top of a base image, and each of your images typically represents one part of your application.

Our application here runs with Node, but you may also have a database which your app connects to, and that database could have its own image where the base would be Postgres for example. But this will be covered later.

Next, WORKDIR sets the directory inside the container where the rest of the commands run. Remember, each container has its own file system, which is completely separate from your host machine.

We then COPY the package.json and package-lock.json files from your host machine into the container, and run npm install inside the container. We do this because we want our containerized app to include all its installed dependencies, so it can run as soon as someone has our image. Copying the package files before the rest of the source also lets Docker reuse its cached npm install layer when only your source code changes.

Now that our image has our package.json dependencies installed, we COPY over the rest of our application’s source code. This is the meat and potatoes of our app, which we’ve likely written ourselves: the entry file (index.js, server.js or similar) and any functions or classes we’ve written.

The above commands define how our image is created when we build it, but we also need to define how the container runs when we (or others) start it. This is where EXPOSE and CMD come in.

EXPOSE documents the port our app listens on inside the container. Running a React or Node app typically involves listening on a port on localhost, but by default the host machine can’t reach ports inside the container. EXPOSE on its own doesn’t publish the port to the host. That happens when you run the container with the -p option (more on this below). The port we expose here is the port our app runs on.

Finally, the CMD line defines what will be executed when our container is run. For us, it’s simply npm start, which is defined in our package.json file.
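For this Dockerfile to work, the project needs a start script and an app listening on port 1138. The sketch below is a hypothetical server.js built only on Node’s http module. It assumes package.json contains "start": "node server.js". One container-specific detail matters here: the server listens on 0.0.0.0 (all interfaces) rather than 127.0.0.1. A server bound only to the container’s loopback interface would not receive traffic forwarded from the host.

```javascript
// server.js: hypothetical entry point, assumed to be started by
// "scripts": { "start": "node server.js" } in package.json.
const http = require("http");

// Must match the EXPOSE line in the Dockerfile.
const PORT = 1138;
// Listen on all interfaces so connections forwarded into the container are accepted.
const HOST = "0.0.0.0";

const server = http.createServer((req, res) => {
  res.writeHead(200, { "Content-Type": "text/plain" });
  res.end(`Hello from inside the container (${req.method} ${req.url})\n`);
});

server.listen(PORT, HOST, () => {
  console.log(`Listening on http://${HOST}:${PORT}`);
});
```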

Creating a .dockerignore file

Before we build our app, we should also create a .dockerignore file. It stops node_modules and other unneeded files from being copied into our image. Otherwise, COPY . . would copy your host’s node_modules over the dependencies installed inside the container. The .dockerignore file goes in the same directory as our Dockerfile, usually the top directory of our app. It could contain:

```
node_modules
npm-debug.log
Dockerfile
.dockerignore
```

Building your image

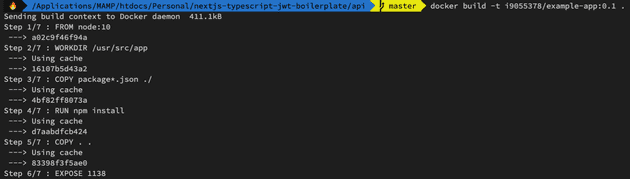

Now that everything is set up, cd to the directory containing your Dockerfile and run the build command:

```shell
docker build -t your-docker-username/your-app-name:0.1 .
```

This command runs through the steps outlined in the Dockerfile and creates your image. The trailing . tells Docker to use the current directory as the build context. The -t your-docker-username/your-app-name:0.1 part names and tags the image.

The name can be almost anything (in lowercase). Best practice is to start with the Docker username you get when you create your Docker Hub account, followed by your app name, then a version tag, in this case :0.1. The username prefix is also what allows you to push the image to your Docker Hub account.
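To make the naming convention concrete, here is a simplified sketch of how a reference like your-docker-username/your-app-name:0.1 splits into a repository and a tag. parseImageRef is a hypothetical helper, not Docker’s parser, and it ignores @sha256: digests. It does show one rule worth knowing: when the tag is omitted, Docker assumes latest.

```javascript
// Hypothetical helper (not part of Docker): split an image reference into
// repository and tag. Simplified: digests such as "@sha256:..." are not handled.
function parseImageRef(ref) {
  const lastSlash = ref.lastIndexOf("/");
  const lastColon = ref.lastIndexOf(":");
  // A colon only starts a tag if it comes after the last "/";
  // otherwise it belongs to a registry host, as in "localhost:5000/app".
  const hasTag = lastColon > lastSlash;
  return {
    repository: hasTag ? ref.slice(0, lastColon) : ref,
    tag: hasTag ? ref.slice(lastColon + 1) : "latest", // Docker's default tag
  };
}

console.log(JSON.stringify(parseImageRef("your-docker-username/your-app-name:0.1")));
// {"repository":"your-docker-username/your-app-name","tag":"0.1"}
console.log(JSON.stringify(parseImageRef("your-docker-username/your-app-name")));
// {"repository":"your-docker-username/your-app-name","tag":"latest"}
```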

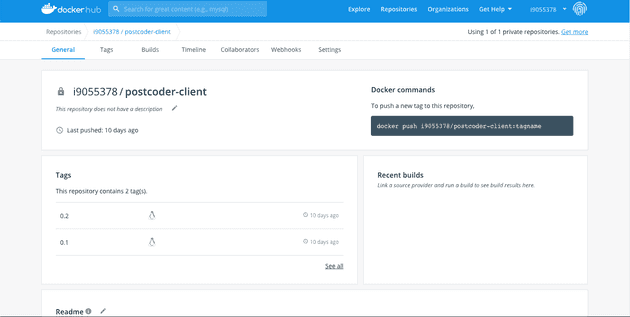

To illustrate how the tags work and why they are important, here’s a project of mine on Docker Hub which has a couple of tags:

You can see there are multiple versions of the image. They can be reviewed on Docker Hub, and also pulled down and run for reference.

Back to building our image: once the docker build command runs, you should see output similar to the below:

This shows our image being built. Once complete, you can run docker images to see the image which is now sitting alongside the hello-world image from earlier. All images on your host machine will be visible here, including your own images, which are prefixed with your Docker username.

Running your image

Now that your image is built, you could technically delete Node and NPM completely from your host machine and still run this image in a container. Not much point doing that, but you could!

To run the image, simply run:

```shell
docker run --name simple-app -p 6000:1138 -d your-docker-username/your-app-name:0.1
```

A few things to note here:

- The above command can be run from any directory on your host machine. It doesn’t need to be next to your Dockerfile, as we’re running the already built image, which is stored by Docker.

- The --name option lets us set a short name which can be referenced when stopping or removing the container.

- The -p option sets up port forwarding. When we built our image, the Dockerfile’s EXPOSE line declared port 1138 (the port our app runs on inside the container). The -p 6000:1138 mapping forwards port 6000 on our host machine to port 1138 in the container.

- Finally, the -d option runs the container in the background in “detached” mode. Without it, we’d see the container’s output in our terminal as it starts up, which can get annoying. We can always run docker logs [container id] to see the logs from any container.
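The order in -p 6000:1138 is easy to mix up: the host port comes first and the container port second. The hypothetical parsePortMapping helper below makes that explicit. It is a simplified illustration covering only the host:container and ip:host:container forms. It ignores port ranges and the /udp suffix.

```javascript
// Hypothetical helper: interpret a `docker run -p` value.
// Supported forms (simplified): "host:container" and "ip:host:container".
function parsePortMapping(spec) {
  const parts = spec.split(":");
  if (parts.length !== 2 && parts.length !== 3) {
    throw new Error(`Unsupported -p value: ${spec}`);
  }
  // With three parts, the first one is the host interface to bind to.
  const hostIp = parts.length === 3 ? parts[0] : "0.0.0.0"; // default: all host interfaces
  const hostPort = Number(parts[parts.length - 2]);          // port on your machine
  const containerPort = Number(parts[parts.length - 1]);     // port inside the container
  return { hostIp, hostPort, containerPort };
}

const m = parsePortMapping("6000:1138");
console.log(`http://localhost:${m.hostPort} -> container port ${m.containerPort}`);
// http://localhost:6000 -> container port 1138
```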

As we did earlier in the post, we can see the container in action by running docker ps -a:

Sharing your image

Now that we’ve built our image and run it as a container on our host machine, we can share it with other people. Publishing Docker images is really easy with Docker Hub.

First, run the following command to log in to Docker on the CLI:

```shell
docker login -u [your docker username]
```

Once logged in, run the following command:

```shell
docker push your-docker-username/your-app-name:0.1
```

This will push the image up to your Docker Hub account, so you can go over to the website and see it in your Docker repository!

If you accidentally delete your image, or want to pull it down on to another machine later, you can run:

```shell
docker pull your-docker-username/your-app-name:0.1
```

Keep the tag in this command. Without it, Docker pulls the latest tag, which we never pushed. Then use the docker run command from earlier to run the application again.

Updating your image

Once the image is on Docker Hub, you may want to release a new version later down the line. To do this, you’ll of course want to build out your new image which has your updates inside, and give it an incremented tag.

The image we pushed to Docker Hub earlier was your-docker-username/your-app-name:0.1, but what if we decide to update the Node base image on our app? This is something which you’ll want to do if there’s a major vulnerability in your base image, for example.

There are two ways to approach this: creating a whole new image from an updated Dockerfile, or using docker commit.

Updating your image with an updated Dockerfile

Remember our Dockerfile:

```dockerfile
# Base image to run on Node V10
FROM node:10

# Create app directory
WORKDIR /usr/src/app

...
```

We are running from Node 10 here. But let’s say we want to update our image to run with a newer version of Node, Node V12. We simply update our Dockerfile to:

```dockerfile
# Base image to run on Node V12
FROM node:12

# Create app directory
WORKDIR /usr/src/app

...
```

Then create a new build, incrementing our tag from 0.1 to 0.2:

```shell
docker build -t your-docker-username/your-app-name:0.2 .
```

Then push the new image up to Docker Hub with docker push your-docker-username/your-app-name:0.2. Simple!

Updating your image with Docker commit

Let’s say you already have a container of your image running with Node V10. As mentioned at the start of the post, you can use the following command to run bash inside your container:

```shell
docker exec -it [container id] /bin/bash
```

Once in the container, you can run npm install -g n to install the n Node version manager. Then run n 12 to download and install the latest Node 12 release. Finally, run exit to leave your container.

You now have a container running a newer version of Node. You can then run:

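```shell
docker commit [container id] with-node-12
```

This is the key part: a new image named with-node-12 is created from the existing container. This image, with the newer version of Node, can then be given an incremented tag and sent to Docker Hub. For example, run docker tag with-node-12 your-docker-username/your-app-name:0.2 and then push it.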

The problem with Docker commit

The commit approach above is not recommended, because our Dockerfile and our latest image on Docker Hub are now out of sync. The next time we build our image from our source code, we’ll be back on Node V10. Perhaps most importantly, further down the line there’s no single source of truth containing all the instructions other people can use to build your images. This is mentioned in the Docker Docs.

So it’s generally recommended that the Dockerfile is your source of truth when it comes to keeping your Docker images easily understandable by people using them. This leads in nicely to my final point on this part of the series, which is linking your Docker Hub project tightly with your source control for your project on GitHub or GitLab (or whatever).

You may have seen many people storing their Dockerfiles in their Git repo - this makes sense as this is the one source of truth for what environment your application should be running in. As you update your app source code to use the latest version of React for example, this may require a specific version of Node, so keeping your Dockerfile updated with your repo makes perfect sense.
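One lightweight way to notice when the Dockerfile has drifted from what the code needs is a start-up guard in the app itself. The sketch below is hypothetical. Its minimum of Node 12 is an assumption chosen to match the FROM node:12 example above. npm’s engines field in package.json is the declarative alternative, though npm only warns about a mismatch unless engine-strict is enabled.

```javascript
// Hypothetical start-up guard: fail fast if the image's Node is older than the
// code expects (12 here, an assumed minimum matching `FROM node:12`).
const REQUIRED_NODE_MAJOR = 12;

// process.versions.node looks like "12.22.12" (no leading "v").
function nodeMajor(version = process.versions.node) {
  return Number(version.split(".")[0]);
}

function assertNodeVersion(required = REQUIRED_NODE_MAJOR, version = process.versions.node) {
  if (nodeMajor(version) < required) {
    throw new Error(`Node ${required}+ required, but this image runs Node ${version}`);
  }
}

assertNodeVersion();
console.log(`Node ${process.versions.node} satisfies >= ${REQUIRED_NODE_MAJOR}`);
```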

Keeping the Dockerfile in your repo also makes Docker’s automated builds possible. With automated builds, Docker images are created and pushed to Docker Hub automatically whenever you commit code to your repo, to the master branch for example. If you link your Docker Hub project back to your GitHub repo, users get a well-connected view of both your source code, which they can easily review, and a quick way to pull your project down and run it instantly.

In the next post…

In this post we’ve covered what Docker is, the basics of building and running Docker images, and pushing them to Docker Hub to be used by anyone. But there are a few things that make really great use of Docker, which I’ll cover in the next post: using Docker as a development environment, running multiple containers together, and deploying Docker to your server.

Thanks for reading!

Senior Engineer at Haven