Creating Flighty

March 18, 2020

Update 23/11/21 - I have since taken the project down from its production environment, but I’m leaving this post here for documentation purposes.

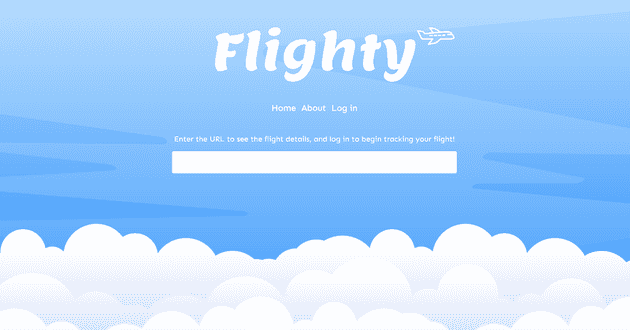

This short post documents my approach to developing a simple web application to scrape a flight booking website and store flight prices for comparison over time. I’ll be explaining a few of the features of the application, the method and technologies used, along with the coding practices.

Note - scraping websites is often prohibited by a website’s terms of use. I’m therefore not going to show how to scrape any specific website, but rather a general approach that can be adapted to any website.

The aim

The aim of the project is simple. I want the user to be able to enter the URL of a flight search and have the application return the flight times and prices. Luckily, most flight search websites store the user’s search in URL query parameters, so the hard work can be done by our application’s back end.

The user will be able to see the times and prices matching their query, and be given the option to set up alerts that notify them of price changes. This involves storing the results of each scrape in our database, and periodically re-checking the same URL to observe changes in the prices.

It’s worth pointing out that there are widely available alternatives to this type of system, such as Jack’s Flight Club (thanks, Matt B) and Skyscanner, but this project is mainly meant to demonstrate the coding practices behind web scraping and combining different integrations.

The Flighty app can be found here!

The stack

The application uses a Node.js back end to handle API requests, with Puppeteer to scrape the sites. Notifications are sent through third-party integrations: Twilio for text messages and Mailgun for email.

The front end of the application is a server-side rendered React application built with the Next.js framework. Everything is written in TypeScript.

I’ll be providing relevant code snippets in this post to explain the top-level code structure, but the post won’t include boilerplate/setup code. To view the projects on GitHub, head over to the client-side app and the server-side app.

Parsing the flight URL

First, we’ll create an Express endpoint that takes the user-provided URL and parses it to retrieve the search data. I want the user to get instant feedback when they paste the URL into the input field, as it provides a nice little UX treat, so being able to parse the URL instantly is important.

```typescript
// src/api/v1/scrape.ts

import { Router } from 'express';
import { Scraper } from '../services/Scraper';
import { UrlParser } from '../services/UrlParser';

const router = Router();

router.post('/parse-url', (req, res) => {
  const theUrl = req.body.theUrl;

  const urlParser = new UrlParser(theUrl);
  // parseUrl() stores its result on urlParser.parsedUrl rather than returning it
  urlParser.parseUrl();

  res.send({
    website: urlParser.website,
    filters: urlParser.parsedUrl
  });
});

...
```

This parse-url endpoint uses a UrlParser class to do the heavy lifting, encapsulating the validation and functionality required to parse the URL. The endpoint can be tested with a tool like Postman by sending a POST request, with a JSON body containing theUrl, to ‘http://localhost:3000/v1/scrape/parse-url’.
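On the client side, the same request can be built in code. This is a minimal sketch, assuming the server uses a JSON body parser (such as express.json()) so that req.body.theUrl is populated, and that the router is mounted at /v1/scrape as in the Postman URL above. The buildParseUrlRequest helper and its return shape are hypothetical, not taken from the Flighty client.

```typescript
// Hypothetical helper: builds the POST request the /parse-url route expects.
// Assumes a JSON body parser on the server and the API mounted at /v1/scrape.
interface ParseUrlRequest {
  endpoint: string;
  init: {
    method: 'POST';
    headers: Record<string, string>;
    body: string;
  };
}

function buildParseUrlRequest(apiBase: string, theUrl: string): ParseUrlRequest {
  return {
    // Strip a trailing slash from the base so we don't produce "//v1/..."
    endpoint: `${apiBase.replace(/\/$/, '')}/v1/scrape/parse-url`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      // The route reads req.body.theUrl, so the key must match exactly
      body: JSON.stringify({ theUrl })
    }
  };
}

// Usage in the browser or Node 18+:
// const { endpoint, init } = buildParseUrlRequest('http://localhost:3000', pastedUrl);
// const { website, filters } = await (await fetch(endpoint, init)).json();
```

Keeping the request construction in one function means the paste handler in the UI only has to deal with the response, and the body key stays in sync with what the route reads.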

The actual parsing of the URL is handled in the UrlParser class:

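```typescript
// src/api/services/UrlParser.ts

import * as dotenv from 'dotenv';
import * as url from 'url';

// Load WEBSITE_TO_SCRAPE (and other settings) from .env
dotenv.config();

class UrlParser {
  public website = 'unknown';
  // IFlightDetails is a project-wide type declared elsewhere (not shown)
  public parsedUrl: IFlightDetails | null = null;
  // protected rather than private so the Scraper subclass can reuse the URL
  protected url: string;

  constructor(theUrl: string) {
    this.url = theUrl;

    // Only try to identify the website if a URL was actually provided
    if (this.url) {
      this.determineWebsite();
    }
  }

  public parseUrl(): void {
    // Nothing to parse for an empty URL or an unsupported website
    if (!this.url || this.website !== process.env.WEBSITE_TO_SCRAPE) {
      this.parsedUrl = null;
      return;
    }

    // url.parse(..., true) returns the query string as an object
    const parsed = url.parse(this.url, true).query;
    this.parsedUrl = this.parsedWebsite(parsed);
  }

  private determineWebsite(): void {
    const websiteToScrape = process.env.WEBSITE_TO_SCRAPE;

    if (websiteToScrape && this.url.includes(websiteToScrape)) {
      this.website = websiteToScrape;
    } else {
      this.website = 'unknown';
    }
  }

  // Map this website's query parameter names onto our own field names
  private parsedWebsite(parsed: any): IFlightDetails {
    return {
      adults: parsed.adults,
      teens: parsed.teens,
      children: parsed.children,
      dateOut: parsed.dateOut,
      dateIn: parsed.dateIn,
      origin: parsed.originIata,
      destination: parsed.destinationIata
    };
  }
}

export { UrlParser };
```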

The constructor first checks that the URL is not empty, and then determines which website the URL belongs to. This is required so the class can run the relevant web scraping functionality for the website we’re scraping. As web scraping is based on DOM elements, one website’s data will be accessed differently to another’s, so it’s important we know which code to execute.
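One caveat with determineWebsite() is that this.url.includes(...) also matches when the domain merely appears somewhere in the URL, for example inside a redirect parameter on another site. A stricter option is to compare against the parsed hostname. This is a sketch under assumptions: KNOWN_SITES and the flights.example.com domain are hypothetical placeholders (the original reads a single domain from WEBSITE_TO_SCRAPE), and it uses the WHATWG URL class built into Node rather than url.parse.

```typescript
// Sketch: detect the target site from the URL's hostname instead of a substring match.
// KNOWN_SITES is hypothetical; the original app read one domain from WEBSITE_TO_SCRAPE.
const KNOWN_SITES = ['flights.example.com'];

function determineWebsite(theUrl: string, knownSites: string[] = KNOWN_SITES): string {
  let hostname: string;
  try {
    hostname = new URL(theUrl).hostname;
  } catch {
    // new URL() throws on anything that isn't an absolute URL
    return 'unknown';
  }
  // Accept the exact host or a subdomain of it (e.g. www.flights.example.com)
  const match = knownSites.find(
    (site) => hostname === site || hostname.endsWith(`.${site}`)
  );
  return match ?? 'unknown';
}
```

The leading dot in the endsWith check matters: it stops a lookalike host such as evilflights.example.com from being treated as a supported site.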

Once the class is instantiated, the object is used to perform the URL parse with:

```typescript
urlParser.parseUrl();
```

This calls the parsedWebsite() method, which builds an object from the URL’s query parameters and stores it on urlParser.parsedUrl. The Express route then sends it back to the user.

This is a very simple way of extracting the information from the URL, but it will of course only work if the URL has query parameters named “adults” and “teens”, for example. The parameter names will likely differ depending on which flight website you’re looking at.
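One way to cope with those differences is to describe each site’s parameter names as data, so supporting another site means adding a mapping rather than another parsing method. The sketch below assumes that approach; the PARAM_MAPS table, the site key, the default of one adult and the choice of required fields are all hypothetical, and it drops "teens" for brevity.

```typescript
// Sketch: per-site query parameter names kept as data.
// Site key, parameter names and defaults are hypothetical examples.
interface FlightFilters {
  adults: number;
  children: number;
  dateOut: string;
  dateIn: string | null;
  origin: string;
  destination: string;
}

type ParamMap = Record<keyof FlightFilters, string>;

const PARAM_MAPS: Record<string, ParamMap> = {
  'flights.example.com': {
    adults: 'adults',
    children: 'children',
    dateOut: 'dateOut',
    dateIn: 'dateIn',
    origin: 'originIata',
    destination: 'destinationIata'
  }
};

function parseFilters(theUrl: string, site: string): FlightFilters | null {
  const map = PARAM_MAPS[site];
  if (!map) return null; // unsupported site
  const params = new URL(theUrl).searchParams;
  const get = (field: keyof FlightFilters) => params.get(map[field]);

  const origin = get('origin');
  const destination = get('destination');
  const dateOut = get('dateOut');
  // Without these the search can't be re-run later, so treat the URL as unparseable
  if (!origin || !destination || !dateOut) return null;

  // Assumed default of one adult when the parameter is missing
  const adults = Number(get('adults') ?? '1');
  if (!Number.isInteger(adults) || adults < 1) return null;

  return {
    adults,
    children: Number(get('children') ?? '0'),
    dateOut,
    dateIn: get('dateIn'), // null for one-way searches
    origin,
    destination
  };
}
```

Returning null for an incomplete URL gives the route a single condition to check, much like the parsedUrl null check in the go endpoint.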

Scraping the site

The next part of the server-side API scrapes the web page at the user’s request, via a second endpoint:

```typescript
// src/api/v1/scrape.ts

...
// (FlightProcessor, Flight and the errors helper are imported in the elided section above)

router.post('/go', async (req, res) => {
  const theUrl = req.body.theUrl;

  try {
    const scraper = new Scraper(theUrl);
    scraper.parseUrl();

    // Bail out before launching a (slow) scrape if the URL couldn't be parsed
    if (!scraper.parsedUrl) {
      throw new Error('Parse error');
    }

    const flightResults = await scraper.scrape();

    const flightProcessor = new FlightProcessor();

    // Create the flight objects and save the flight details, NOT the results of the
    // scrape, as the results are updated each time the site is scraped for this flight
    const inAndOutFlights = await flightProcessor.saveFlightsFromScrapedUrl(
      scraper.parsedUrl
    );

    // One entry per flight direction (outbound, and return if there is one)
    await Promise.all(
      inAndOutFlights.map(async (flightDetails, i) => {
        if (!flightDetails.flightDate) {
          return false;
        }

        const flight = new Flight(flightDetails.id);
        // req.thisUser is populated by the auth middleware
        await flight.assignFlightToUser(req.thisUser.id);
        await flight.saveResultsFromScrape(flightResults, i);

        return flight;
      })
    );

    res.send({
      success: true,
      website: scraper.website,
      filters: scraper.parsedUrl,
      scrapedData: flightResults,
      flightIds: inAndOutFlights
    });
  } catch (err) {
    return errors.errorHandler(res, (err as Error).message, null);
  }
});
```

This go endpoint uses a Scraper class, which extends the UrlParser class, to perform the scraping of the site. The Scraper class is responsible for scraping the site’s DOM elements, formatting and validating the data, and returning the data we need to send back to the user (and also store in the database). I won’t go into detail about how the site is scraped, as it’s fairly self-explanatory from the source code over on GitHub.
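To give a flavour of the "formatting and validating" step: text pulled out of the DOM is just strings, so it has to be cleaned up before it can be compared or stored. This is a hypothetical sketch, not the Scraper class from the repo. It assumes prices are rendered like "£1,049.99" (currency symbol, comma thousands separator, dot decimal) and times like "06:35"; a real site’s markup will differ.

```typescript
// Hypothetical formatter for raw strings scraped from the DOM.
// Assumes "£1,049.99"-style prices and 24-hour "HH:MM" times.
interface ScrapedFlight {
  departure: string;
  arrival: string;
  price: number;
}

function parsePrice(raw: string): number | null {
  // Keep only digits and the decimal point, e.g. "£1,049.99" -> "1049.99"
  const cleaned = raw.replace(/[^0-9.]/g, '');
  if (cleaned === '') return null;
  const value = Number(cleaned);
  return Number.isFinite(value) ? value : null;
}

const TIME_PATTERN = /^([01]\d|2[0-3]):[0-5]\d$/;

function formatScrapedRow(raw: { departure: string; arrival: string; price: string }): ScrapedFlight | null {
  const departure = raw.departure.trim();
  const arrival = raw.arrival.trim();
  const price = parsePrice(raw.price);
  // Reject rows that don't look like a real result rather than storing bad data
  if (!TIME_PATTERN.test(departure) || !TIME_PATTERN.test(arrival) || price === null) {
    return null;
  }
  return { departure, arrival, price };
}
```

Dropping malformed rows at this point keeps things like "Sold out" labels out of the flight_results table, where they would break later price comparisons.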

Once the site is scraped and we have the flightResults, the FlightProcessor class is used to save the flights to the database. We want to save the basic flight details so the same flight can be updated with its flight results each time the URL is scraped. Also, multiple people might be watching the same flight, so it makes sense to have one reference point for the flight to keep the database normalised. The database will store the following flight information:

id, origin, destination, datetime
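The "one reference point per flight" idea boils down to a get-or-create keyed on the flight’s identifying columns. The sketch below uses an in-memory Map as a stand-in for the flights table; FlightTable and getOrCreate are hypothetical names, and in a real database the same guarantee would come from a unique constraint on (origin, destination, datetime).

```typescript
// In-memory stand-in for the flights table: one row per (origin, destination, datetime),
// shared by every user watching that flight. Names are hypothetical.
interface FlightRow {
  id: number;
  origin: string;
  destination: string;
  datetime: string;
}

class FlightTable {
  private rows = new Map<string, FlightRow>();
  private nextId = 1;

  // Return the existing row for this flight, or insert a new one
  getOrCreate(origin: string, destination: string, datetime: string): FlightRow {
    const key = `${origin}|${destination}|${datetime}`;
    const existing = this.rows.get(key);
    if (existing) return existing;
    const row: FlightRow = { id: this.nextId++, origin, destination, datetime };
    this.rows.set(key, row);
    return row;
  }

  get size(): number {
    return this.rows.size;
  }
}
```

Two users searching for the same flight get back the same id, so their results and alerts all hang off one row.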

The variable inAndOutFlights holds the data for the flight in both directions. If the user enters the URL for a one-way flight, for example, this will be a one-element array.
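As an illustration of how the parsed filters could become those per-direction entries, here is a sketch. The field names mirror the UrlParser output (origin, destination, dateOut, dateIn), but toFlightLegs and the FlightLeg shape are hypothetical; the real conversion lives in FlightProcessor.saveFlightsFromScrapedUrl, which isn’t shown in this post.

```typescript
// Sketch: turn parsed URL filters into one entry per flight direction.
// FlightLeg and toFlightLegs are hypothetical names.
interface ParsedFilters {
  origin: string;
  destination: string;
  dateOut: string;
  dateIn?: string | null;
}

interface FlightLeg {
  origin: string;
  destination: string;
  flightDate: string;
}

function toFlightLegs(filters: ParsedFilters): FlightLeg[] {
  // The outbound leg always exists
  const legs: FlightLeg[] = [
    { origin: filters.origin, destination: filters.destination, flightDate: filters.dateOut }
  ];
  // Only add a return leg (origin and destination swapped) when there is a return date
  if (filters.dateIn) {
    legs.push({ origin: filters.destination, destination: filters.origin, flightDate: filters.dateIn });
  }
  return legs;
}
```

Keeping a fixed order (outbound first) matters because the go endpoint passes each entry’s index i to saveResultsFromScrape to pick out that direction’s results.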

This array of basic flight information for the outgoing and return flights is then mapped, with each flight direction creating a new Flight class instance. Again, I won’t go into detail about the Flight class as the code is all on GitHub, but this class assigns the flight to the user and saves the scraped flight results.

The flight is assigned to the user by storing a flight-user reference in the user_flights DB table. This contains the following info:

id, userId, flightId, alertSet

Storing the user-flight link in its own table keeps the database normalised: each flight is stored once, however many users are watching it, which keeps the data DRY.
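A rough model of the user_flights link table is sketched below, using the columns listed above (id, userId, flightId, alertSet). The UserFlights class is a hypothetical in-memory stand-in, not the project’s Flight or FlightAlert code. It shows two behaviours the design relies on: linking the same user to the same flight twice doesn’t create a duplicate row, and switching an alert on touches only that user’s row.

```typescript
// In-memory stand-in for user_flights (id, userId, flightId, alertSet).
// Class and method names are hypothetical; a real table would also add a
// unique constraint on (userId, flightId).
interface UserFlightRow {
  id: number;
  userId: number;
  flightId: number;
  alertSet: boolean;
}

class UserFlights {
  private rows: UserFlightRow[] = [];

  // Link a user to a shared flight row; re-running a scrape doesn't duplicate the link
  assignFlightToUser(flightId: number, userId: number): UserFlightRow {
    const existing = this.find(flightId, userId);
    if (existing) return existing;
    const row: UserFlightRow = { id: this.rows.length + 1, userId, flightId, alertSet: false };
    this.rows.push(row);
    return row;
  }

  // Turn alerts on for this user's link only; false if the user isn't watching the flight
  setAlert(flightId: number, userId: number): boolean {
    const row = this.find(flightId, userId);
    if (!row) return false;
    row.alertSet = true;
    return true;
  }

  // Every user watching a given flight
  watchersOf(flightId: number): number[] {
    return this.rows.filter((r) => r.flightId === flightId).map((r) => r.userId);
  }

  private find(flightId: number, userId: number): UserFlightRow | undefined {
    return this.rows.find((r) => r.flightId === flightId && r.userId === userId);
  }
}
```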

Finally, the flight results are saved in another database table called flight_results, which stores the following info:

id, flightId, price, date

Again, this keeps the database normalised. As we’re aiming to store updated flight results periodically (for example, every hour or every day), this table structure is an efficient way to store them, rather than repeating the specific flight details for each set of results.
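Treating flight_results as append-only means each scrape adds new rows that point at a flight by id, and a flight’s price history is just a filter and sort. This is a sketch under assumptions: FlightResults is a hypothetical in-memory stand-in, prices are plain numbers in one currency, and the date column is taken to be the time of the scrape (stored as an ISO 8601 string).

```typescript
// Sketch of an append-only flight_results table (id, flightId, price, date).
// Assumes `date` is the scrape time; class and method names are hypothetical.
interface FlightResultRow {
  id: number;
  flightId: number;
  price: number;
  date: string; // ISO 8601, so string order matches time order
}

class FlightResults {
  private rows: FlightResultRow[] = [];

  // Every scrape adds a new row; existing rows are never updated
  record(flightId: number, price: number, scrapedAt: Date): FlightResultRow {
    const row: FlightResultRow = {
      id: this.rows.length + 1,
      flightId,
      price,
      date: scrapedAt.toISOString()
    };
    this.rows.push(row);
    return row;
  }

  // Price history for one flight, oldest first
  history(flightId: number): FlightResultRow[] {
    return this.rows
      .filter((r) => r.flightId === flightId)
      .sort((a, b) => a.date.localeCompare(b.date));
  }
}
```

Each row only carries a flightId and a price, so hourly scrapes of a popular flight add very little data compared with copying the origin, destination and datetime into every result.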

Sending alerts

The final piece of this simple app is the alert system. I’ve initially created an email alert system, which uses Nodemailer to send emails through Mailgun’s SMTP service, but the code is structured to allow for additional alert methods in future, such as text messaging.
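One common way to structure alerts so that new methods can be added later is a small channel interface that each method implements. The sketch below is hypothetical: AlertChannel, the recipient shape and both channel classes are illustrative names, and the classes only record what they would send. In the real app, send() would call Nodemailer (for Mailgun) or Twilio.

```typescript
// Sketch of a pluggable alert design; all names are hypothetical and the channels
// only record messages instead of calling Mailgun or Twilio.
interface AlertMessage {
  subject: string;
  body: string;
}

interface Recipient {
  email?: string;
  phone?: string;
}

interface AlertChannel {
  name: string;
  canSend(recipient: Recipient): boolean;
  send(recipient: Recipient, message: AlertMessage): Promise<void>;
}

class RecordingEmailChannel implements AlertChannel {
  name = 'email';
  sent: string[] = [];
  canSend(recipient: Recipient): boolean {
    return Boolean(recipient.email);
  }
  async send(recipient: Recipient, message: AlertMessage): Promise<void> {
    // Stand-in for a Nodemailer transport pointed at Mailgun's SMTP server
    this.sent.push(`${recipient.email}: ${message.subject}`);
  }
}

class RecordingSmsChannel implements AlertChannel {
  name = 'sms';
  sent: string[] = [];
  canSend(recipient: Recipient): boolean {
    return Boolean(recipient.phone);
  }
  async send(recipient: Recipient, message: AlertMessage): Promise<void> {
    // Stand-in for a Twilio API call
    this.sent.push(`${recipient.phone}: ${message.body}`);
  }
}

// Send through every channel the recipient supports; resolves to the channel names used
async function dispatchAlert(
  channels: AlertChannel[],
  recipient: Recipient,
  message: AlertMessage
): Promise<string[]> {
  const usable = channels.filter((c) => c.canSend(recipient));
  await Promise.all(usable.map((c) => c.send(recipient, message)));
  return usable.map((c) => c.name);
}
```

Adding text messages then means registering another channel rather than changing the loop that processes alerts.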

```typescript
// src/api/v1/flight.ts

...

router.post('/send-alerts', async (req, res) => {
  try {
    const userFlights = new FlightAlertProcessor();
    const userAlertFlights = await userFlights.getUserAlertFlights();

    // TODO: send alerts using queue system
    // forEach ignores the promises its callback returns, so map + Promise.all is
    // needed to actually wait for (and catch errors from) every alert
    await Promise.all(
      userAlertFlights.map((alertFlight: IUserFlight) => {
        const flightAlert = new FlightAlert();
        return flightAlert.processFlightAlert(alertFlight);
      })
    );

    res.send({
      success: true
    });
  } catch (err) {
    return errors.errorHandler(res, (err as Error).message, null);
  }
});

// Every route registered below this line requires a valid token
router.use(verifyToken());

router.post('/create-alert', async (req, res) => {
  const flightIds = req.body.flightIds;

  try {
    // Wait for every alert to be saved before reporting success
    await Promise.all(
      flightIds.map(async (flightId: IFlightResults) => {
        if (!flightId.id) {
          return null;
        }

        const flight = new FlightAlert();
        return flight.setAlert(flightId.id, req.thisUser.id);
      })
    );

    res.send({
      success: true
    });
  } catch (err) {
    return errors.errorHandler(res, (err as Error).message, null);
  }
});
```

The router.post('/create-alert') route takes the user’s request to put their flight in alert mode, setting the alertSet column to TRUE for the user’s flight. Of course, this is only done for the user’s own entry in user_flights, not for the shared flight record.

This route is below the router.use(verifyToken()) route middleware, which helps secure the application from unauthorized requests.
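The verifyToken() implementation isn’t shown in this post, so here is a minimal sketch of what a middleware factory of that kind could look like. Several things are assumptions: the token arrives as an "Authorization: Bearer ..." header, the actual check (for example a JWT library’s verify call) is injected as a function, which differs from the zero-argument verifyToken() used above, and the request/response types are cut-down stand-ins for Express’s so the sketch runs on its own.

```typescript
// Minimal sketch of a verifyToken-style middleware factory. The token check is
// injected, and the request/response types are simplified stand-ins for Express's.
interface AuthedRequest {
  headers: Record<string, string | undefined>;
  thisUser?: { id: number };
}

interface MinimalResponse {
  status(code: number): MinimalResponse;
  send(body: unknown): void;
}

type Next = () => void;
type TokenVerifier = (token: string) => { id: number } | null;

function verifyToken(verify: TokenVerifier) {
  return (req: AuthedRequest, res: MinimalResponse, next: Next): void => {
    // Express lower-cases incoming header names
    const header = req.headers['authorization'] ?? '';
    const token = header.startsWith('Bearer ') ? header.slice('Bearer '.length) : '';
    const user = token ? verify(token) : null;
    if (!user) {
      // Not calling next() means routes registered after this middleware never run
      res.status(401).send({ success: false, error: 'Unauthorized' });
      return;
    }
    req.thisUser = user; // later handlers read req.thisUser.id
    next();
  };
}
```

This is also why route order matters in the router above: Express runs handlers in registration order, so /send-alerts, registered before router.use(...), never passes through the check.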

Finally, the router.post('/send-alerts') route is registered above the auth middleware, so it can be called by anyone. It does not return or expose any information, but instead starts a series of actions on the server, inside the processFlightAlert() method, which are to:

- Loop through each user flight and check the original URL for updated information

- Save the latest flight price into our database as a new entry

- Compare the previous entry with the latest one we just saved

- Send the user an email with the information on the price change
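The comparison and email steps above can be sketched as two small functions. This is a hypothetical illustration, not the processFlightAlert() code from the repo. It assumes the price history is ordered oldest first (as it would be if read back from flight_results sorted by date) and that prices are numbers in a single currency; the PriceChange shape and the message wording are made up.

```typescript
// Sketch of the comparison and email steps; names and wording are hypothetical.
// Assumes `history` is ordered oldest first and prices share one currency.
interface PricePoint {
  price: number;
  date: string;
}

interface PriceChange {
  previous: number;
  latest: number;
  difference: number;
  direction: 'up' | 'down' | 'same';
}

function comparePrices(history: PricePoint[]): PriceChange | null {
  if (history.length < 2) return null; // nothing to compare against yet
  const previous = history[history.length - 2].price;
  const latest = history[history.length - 1].price;
  // Round to pennies to avoid floating-point noise such as -10.000000000000004
  const difference = Math.round((latest - previous) * 100) / 100;
  const direction = difference > 0 ? 'up' : difference < 0 ? 'down' : 'same';
  return { previous, latest, difference, direction };
}

function alertEmailBody(route: string, change: PriceChange): string {
  if (change.direction === 'same') {
    return `${route}: still ${change.latest.toFixed(2)}`;
  }
  return `${route}: price went ${change.direction} by ${Math.abs(change.difference).toFixed(2)} to ${change.latest.toFixed(2)}`;
}
```

Returning null when there is only one entry avoids sending a misleading "price changed" email right after the first scrape.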

Database structures

I’ve touched on the database structure above, but for more info on the table structures, head over to the GitHub repo and the DB migrations area.

Thanks for reading

I hope this was helpful, thanks for reading :)

Senior Engineer at Haven