My TypeScript, Fastify and Prisma starter project wrapped with Docker

May 08, 2022

The starter repo discussed in this post can be found here.

Benefits of the tech used for this starter project

TypeScript has always been a favourite of mine. With optional static typing and neat ways to organise code into classes with explicitly defined public/private methods, it helps the DX of any project. It can be sprinkled into a project to help with only the more complex object structures, or used religiously for every aspect of the project. The choice is yours.

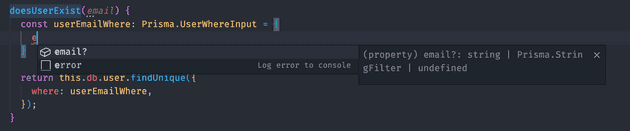

Once installed, TypeScript works perfectly alongside the database ORM Prisma, to assist in auto generating TS types, removing the need to laboriously create the types manually after already creating the object structure for Prisma itself. Here’s an example of how this works, getting a list of types automatically when writing out a query in Prisma:

A previous starter project of mine used the Sequelize ORM alongside TypeScript, but it was extremely difficult to get types working with the data modelling provided by Sequelize because of the lack of baked in support. Prisma is the answer to that problem.

The above are pulled together to create a lightning fast Node.js based API using the Fastify framework. If you’ve not used Fastify before, it’s similar to Node’s popular Express framework, but with a simplified setup, superior performance, and a load of other neat features which make the DX that little bit better.

Finally wrapping this all together nicely is Docker, which I prefer to use in every project I can in order to limit the number of packages installed on my MacBook globally. I don’t like the idea of managing a version of Postgres on my host machine, for example, so use a simple few lines in a docker compose file to sort all that boilerplate setup out for me. Docker also means other people using the project have a much easier time getting stuck in rather than worrying about incompatibility issues during setup.

The whole starter project can be found here, but the rest of this post will go through setup and a couple of challenges I faced along the way.

Getting started - installing TypeScript

To start with, we’ll run npm init to generate the package.json file. Then install the dev dependencies needed:

    npm install typescript ts-node-dev @types/node -D

Then add the project entry .ts file, index.ts. I add my source files to a src directory, so I’m left with the following project structure:

    - root
      - node_modules
      - src
        - index.ts
      - package.json
      - package-lock.json

Depending on how TypeScript is set up, you may want to use a configuration file to tell the TS compiler how to run, so here’s a tsconfig.json file (in the project root) I use to get the setup I want:

    {
      "compilerOptions": {
        "module": "commonjs",
        "target": "ESNext",
        "moduleResolution": "node",
        "skipLibCheck": true,
        "sourceMap": true,
        "outDir": "build",
        "forceConsistentCasingInFileNames": true,
        "noImplicitAny": false,
        "strict": true
      }
    }

Finally, update the package.json file to include run scripts to start the project, leaving you with the following file:

    {
      ...
      "scripts": {
        "dev": "ts-node-dev --exit-child ./src/index.ts",
        "start": "tsc && node ./build/index.js"
      },
      "devDependencies": {
        "@types/node": "^17.0.31",
        "ts-node-dev": "^1.1.8",
        "typescript": "^4.6.4"
      }
    }

Running TypeScript with tsc vs ts-node

TypeScript can be integrated into a project in many ways, using a number of packages. For example, Babel can be used to compile TypeScript without even having TypeScript installed on the project (although it only strips the types and does no type checking, so it lacks the type benefits that come with using actual TypeScript). TypeScript can also be compiled on the front end as part of Webpack, by using the Webpack loader ts-loader as described in full here.

In this starter project though, I’m opting for a slightly lower level approach. For running in production (the start script in the package.json), I’m using the raw TypeScript tsc command to transpile the .ts files to JavaScript .js files. The tsc command can be used in many ways, as described here, and I like to use the most flexible and easiest option, which is running tsc alone and using the tsconfig.json to define the options for the compiler. As instructed by my config file, running tsc will look for the .ts files and compile them to the /build directory. Once compiled, the start script then runs the node ./build/index.js command.

For development (the dev script in the package.json file), I’m using the package ts-node-dev. Unlike the traditional way of transpiling to a JS output, the ts-node-dev package takes a root .ts file as the input argument, follows it to all other linked .ts files, and runs the application with its TypeScript execution engine, ts-node. It “JIT transforms TypeScript into JavaScript, enabling you to directly execute TypeScript on Node.js without precompiling”. This is great for development as it listens to file changes out of the box, and is more performant to work with than recompiling the whole project on every change. The difference is noticeable, especially for large projects.

To test the TypeScript integration is working so far, add a console.log to the index.ts file and run the dev and start commands to see the output.
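As a minimal sketch of that smoke test, something like the following could go in src/index.ts. The greet function is a hypothetical placeholder and is not taken from the starter repo; any small piece of typed code would do the same job.

```typescript
// src/index.ts - hypothetical smoke test, not part of the starter repo
function greet(name: string): string {
  return `Hello, ${name}!`
}

// Passing a number here, e.g. greet(42), would be rejected by the compiler
console.log(greet("TypeScript"))
```

Running either script should print the greeting. The dev script will also re-run it whenever the file is saved.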

Installing Fastify and starting a basic server

Once the base TypeScript project is set up, Fastify can be integrated with:

    npm install fastify

Once installed, the index.ts file can be updated to start our server:

    // index.ts
    import fastify from "fastify"

    const server = fastify()

    server.get("/", function (request, reply) {
      reply.send({ hello: "world" })
    })

    server.listen({ port: 8080, host: "0.0.0.0" }, (err, address) => {
      if (err) {
        console.error(err)
        process.exit(1)
      }
      console.log(`Server listening at ${address}`)
    })

Note: it’s critical that the host is set to 0.0.0.0 when setting up the server, because we are later going to run from inside a docker container. By default, Fastify listens only on the address 127.0.0.1 (localhost), but as we’ll later be accessing the server from outside the container, we need to update it to listen on all IPs.
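If you would rather not hard-code these values, one option is to read them from environment variables and fall back to the container-friendly defaults. This is only a sketch: the resolveListenOptions helper and the PORT and HOST variable names are my own assumptions, not something Fastify reads by itself.

```typescript
// Hypothetical helper: PORT and HOST are assumed variable names
interface ListenOptions {
  port: number
  host: string
}

function resolveListenOptions(
  env: Record<string, string | undefined>
): ListenOptions {
  const port = Number(env.PORT ?? 8080)
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT value: ${env.PORT}`)
  }
  // Default to all interfaces so the server is reachable from outside the container
  return { port, host: env.HOST ?? "0.0.0.0" }
}

// With no variables set, this gives { port: 8080, host: "0.0.0.0" }
const listenOptions = resolveListenOptions({})
```

The result could then be passed as the first argument of server.listen in place of the inline object, for example with process.env as the input.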

Setting up Prisma - installation and schema (part 1)

Prisma comes in a few different parts - notably the “Prisma Client” and “Prisma Migrate”. Prisma Client provides the functionality for us to query the database with create and find commands, for example. Prisma Migrate provides a way for us to keep the database schema file in sync with the actual database, performing migrations and other related actions.

To start with, run:

    npm install prisma

This installs the Prisma CLI tool, and the Prisma Migrate functionality. Once installed, we can run the following command to initiate Prisma in our project:

    npx prisma init

The above command creates the prisma directory in our project, which contains the important schema.prisma file. This schema file will eventually contain the database modelling for each table, including types, and the table relations. As this post is really only about the setup of the technologies working together, just include any model you like, such as a User model:

    // ./prisma/schema.prisma
    generator client {
      provider = "prisma-client-js"
    }

    datasource db {
      provider = "postgresql"
      url      = env("DATABASE_URL")
    }

    model User {
      id        Int     @id @default(autoincrement())
      firstName String?
      lastName  String?
    }

The npx prisma init command also created a .env file in the project root, containing a placeholder DATABASE_URL value. We need to fill this in, but don’t have a running database yet. To get this database set up, we’re going to use Docker.

Setting up Docker with docker compose

As well as using Docker to contain our Postgres database, we’ll also use it to hold the Node.js API. Keeping everything together in one place just feels right.

Start by creating the docker-compose.yml file:

    version: "3.8"
    services:
      ts-starter-api:
        platform: linux/x86_64 # Critical so Prisma runs correctly on an M1 Mac
        build: ./
        environment:
          NODE_ENV: development
        ports:
          - 8080:8080
          - 9240:9240 # Node inspect
        volumes:
          - ./:/home/app/api
          - /home/app/api/node_modules
        working_dir: /home/app/api
        restart: on-failure
        depends_on:
          - ts-starter-db
      ts-starter-db:
        image: postgres
        environment:
          - POSTGRES_USER=user
          - POSTGRES_DB=ts-starter
          - POSTGRES_PASSWORD=password
          - POSTGRES_HOST_AUTH_METHOD=trust
          - PGDATA=/var/lib/postgresql/data/pgdata # Critical for Postgres to work in the container
        volumes:
          - ./db/data/postgres:/var/lib/postgresql
        ports:
          - 5432:5432

The docker-compose.yml file orchestrates the different parts of the application so they can be installed together on the same network, allowing containers to communicate with each other. The two containers we’re creating are ts-starter-api and ts-starter-db.

Starting from the top of the file, I’ll go into some of the notable parts:

- First, the platform: linux/x86_64 option is critical when running on a machine with an M1 Mac chip. Without this line, M1 machines will run the native arm64 container, which is not compatible with the Prisma binaries.
- The build: ./ option tells docker compose to build based on the Dockerfile (more on that next!).
- The ports allow the host machine to access the ports in a container by a sort of port forwarding. Relating the ports to the same number is usually the done thing to keep it simple.
- The PGDATA=/var/lib/postgresql/data/pgdata environment variable is needed to get the database working within Docker compose.
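The order of the two numbers in a ports entry is easy to mix up: in the short form used above, the host port comes first and the container port second. The sketch below spells that out in TypeScript. The parsePortMapping helper is purely illustrative (Docker does this parsing itself) and only handles the simple two-number form.

```typescript
interface PortMapping {
  hostPort: number
  containerPort: number
}

// Hypothetical helper: parses only the simple "HOST:CONTAINER" short form
function parsePortMapping(mapping: string): PortMapping {
  const match = /^(\d+):(\d+)$/.exec(mapping)
  if (!match) {
    throw new Error(`Unsupported port mapping: ${mapping}`)
  }
  return { hostPort: Number(match[1]), containerPort: Number(match[2]) }
}

// "3000:8080" would expose the container's port 8080 on the host's port 3000
const exampleMapping = parsePortMapping("3000:8080")
```

So if port 8080 is already taken on the host, only the first number needs to change. The Fastify server inside the container keeps listening on 8080.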

Configuring the Dockerfile

The docker-compose.yml file will be useless without the Dockerfile. The Dockerfile is used alongside the compose file to handle the creation of the Docker image:

    FROM node:16.15.0-alpine

    WORKDIR /home/app/api

    COPY prisma ./prisma/
    COPY package*.json ./

    RUN npm install

    COPY . .

    RUN npm run prisma:generate

    CMD ["npm", "run", "dev"]

The Dockerfile uses node:16.15.0-alpine as a base image. The Prisma files, package.json files, and project files are copied over from host to image with the COPY command, building the image as we want. The dependencies are installed on the image with RUN npm install, and Prisma files are generated with RUN npm run prisma:generate (more on that later). Finally, npm run dev is the last action, starting the server with the script from package.json.

Setting up Prisma - migration and querying (part 2)

Now that Docker is set up, we can circle back to Prisma and complete our schema.prisma file. Our file needs one remaining element - the database URL.

The database URL consists of credentials we set up in the docker compose file earlier in the format of:

    DATABASE_URL="postgresql://[user]:[password]@[host]:[port]/[name]?schema=public"

… which, given our values in the docker compose file above, translates to:

    DATABASE_URL="postgresql://user:password@ts-starter-db:5432/ts-starter?schema=public"

The host outside of docker would almost always be localhost, but when using Docker this would be the host as recognised in a docker container environment, which is the service name of the container - ts-starter-db.

The database URL needs to replace the placeholder value in the .env file created by Prisma earlier.
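To make the mapping between the compose values and the URL explicit, here is a small sketch that assembles the string from its parts. The buildDatabaseUrl helper and the DatabaseConfig shape are hypothetical names of my own. Prisma itself only needs the final string in the .env file.

```typescript
// Hypothetical shape, mirroring the placeholders in the URL format above
interface DatabaseConfig {
  user: string
  password: string
  host: string
  port: number
  name: string
  schema?: string
}

function buildDatabaseUrl(config: DatabaseConfig): string {
  const { user, password, host, port, name, schema = "public" } = config
  // Percent-encode the credentials so characters like "@" or ":" cannot break the URL
  const credentials = `${encodeURIComponent(user)}:${encodeURIComponent(password)}`
  return `postgresql://${credentials}@${host}:${port}/${name}?schema=${schema}`
}

// Values taken from the docker compose file in this post
const databaseUrl = buildDatabaseUrl({
  user: "user",
  password: "password",
  host: "ts-starter-db",
  port: 5432,
  name: "ts-starter",
})
// databaseUrl === "postgresql://user:password@ts-starter-db:5432/ts-starter?schema=public"
```

The encoding step only matters once the password contains special characters, but it is an easy thing to trip over when moving away from the placeholder credentials.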

With the database URL in place, the schema.prisma file should still look like this:

    // ./prisma/schema.prisma
    generator client {
      provider = "prisma-client-js"
    }

    datasource db {
      provider = "postgresql"
      url      = env("DATABASE_URL")
    }

    model User {
      id        Int     @id @default(autoincrement())
      firstName String?
      lastName  String?
    }

At this point, we’ve installed the Prisma CLI and run npx prisma init to create our schema file, but there’s been no actual database activity yet - i.e. no migrations have happened, no tables created, no queries… queried.

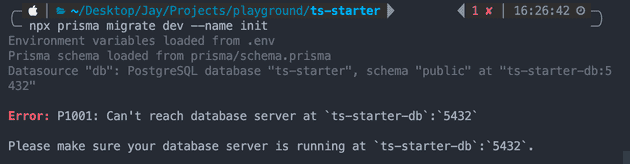

To get started using the database, we must first create a migration file (based on the model we’ve created) and run the migration. To do this, we need to use the CLI inside the docker container, otherwise we get this error:

This error happens because the environment variable for the database URL points to a docker container host name, which is only accessible from within another docker container. So the actual command to open a shell in the container would be:

    docker exec -it [container-name] sh # my container name was "ts-starter-ts-starter-api-1"

Once in the container we can initiate the first migration:

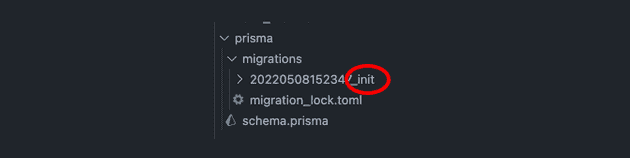

    npx prisma migrate dev --name init

A couple of things are worth pointing out here. Firstly, npx prisma migrate dev ends with the keyword dev because it’s “a development command and should never be used in a production environment”, as described in the docs. So it’s kind of great they have made it clear like that, compared to Sequelize which is the wild west of naming conventions and migration management.

Secondly, the --name init option is great for naming each migration. Naming migration files is a standard practice, and helps other devs sift through what could end up with hundreds of different migrations over time. Our initial migration here is aptly called “init”, but it’s good to name things based on the migration, such as “added_posts_table” or “removed_date_column_from_tags”.
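If you want to keep migration names consistent across a team, a tiny helper can turn a plain description into the snake_case style used above. This is just a sketch of one possible convention. The toMigrationName function is hypothetical and Prisma does not require any particular naming format.

```typescript
// Hypothetical helper: converts a free-text description to a snake_case name
function toMigrationName(description: string): string {
  return description
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "_") // collapse spaces and punctuation into underscores
    .replace(/^_+|_+$/g, "") // trim leading and trailing underscores
}

const migrationName = toMigrationName("Added posts table")
// migrationName === "added_posts_table"
```

The result would then be passed to the --name option of the migrate command.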

Once the above command has finished, you can connect to your fancy new database and see the new User table in all its glory.

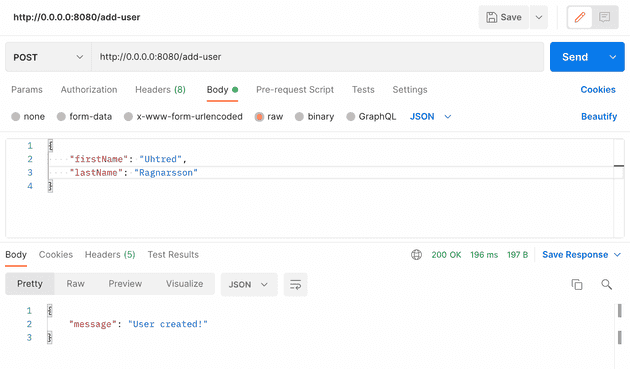

Querying a Prisma database from within a docker container

The final step with setting up Prisma is to install the Prisma Client, and create our first query. First, install the client:

    npm install @prisma/client

With the Prisma Client installed, we can create our first query:

    // index.ts
    import fastify from "fastify"
    import { PrismaClient } from "@prisma/client"

    const server = fastify()
    const prisma = new PrismaClient()

    interface UserType {
      firstName: string
      lastName: string
    }

    interface ResponseType {
      message: string
    }

    async function addUser({ firstName, lastName }: UserType) {
      await prisma.user.create({
        data: {
          firstName,
          lastName,
        },
      })
    }

    server.post<{ Body: UserType; Reply: ResponseType }>(
      "/add-user",
      function (request, reply) {
        const { firstName, lastName } = request.body

        addUser({ firstName, lastName })
          // Only send the success response if the query resolved
          .then(() => {
            reply.code(200).send({ message: "User created!" })
          })
          .catch((e) => {
            console.error(e)
            reply
              .code(500)
              .send({ message: "There was a problem adding the user." })
          })
          // Runs after either outcome, so the reply is only ever sent once
          .finally(async () => {
            await prisma.$disconnect()
          })
      }
    )

    server.listen({ port: 8080, host: "0.0.0.0" }, (err, address) => {
      if (err) {
        console.error(err)
        process.exit(1)
      }
      console.log(`Server listening at ${address}`)
    })

There’s a lot to unpack in that one snippet, and in the starter repo for this post it’s not all in one file, as that would be terrible for a production ready application. But for the purposes of seeing how all these parts fit together, it’s worth seeing in one place.

- Starting off at the top, fastify and PrismaClient are imported from our packages and assigned to const variables.
- The UserType and ResponseType interfaces are created for use with Fastify’s route typing. Without defining types, TypeScript will error (depending on config settings), so it’s worth getting into the habit of doing it.
- The addUser function is defined, which uses the Prisma Client API to write a nice readable query.
- The server.post<{ Body: UserType; Reply: ResponseType }> attaches typings to the Fastify route using generics as described in their refreshingly detailed docs.
- Finally, as with any other Node.js framework route, data is extracted from the request object and passed to a function. Fastify’s reply is used to send a response back with the relevant HTTP status code depending on the success of the query, and Prisma is disconnected.
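One thing worth keeping in mind is that the route generics only exist at compile time, so nothing stops a client from posting a body with a missing field. Fastify can validate bodies with a JSON schema on the route, but as a framework-free sketch of the same idea, a type guard like the one below could be called at the top of the handler. The isUserType function is a hypothetical addition and is not in the starter repo.

```typescript
interface UserType {
  firstName: string
  lastName: string
}

// Hypothetical runtime check that narrows an unknown body to UserType
function isUserType(body: unknown): body is UserType {
  if (typeof body !== "object" || body === null) {
    return false
  }
  const candidate = body as Record<string, unknown>
  return (
    typeof candidate.firstName === "string" &&
    typeof candidate.lastName === "string"
  )
}

const validBody = isUserType({ firstName: "Ada", lastName: "Lovelace" }) // true
const invalidBody = isUserType({ firstName: "Ada" }) // false
```

A handler could respond with a 400 status code when the check fails, before the query is ever attempted.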

Be sure to use “prisma generate” with Docker

In my project starter I have added the following script:

    {
      ...
      "scripts": {
        ...
        "prisma:generate": "prisma generate",
        ...
      }
    }

The prisma generate command reads the schema file, and updates the relevant “under the hood” files in the Prisma Client, as described in the docs with a neat little diagram. This generate command was run automatically when we first installed the Prisma Client earlier, but is also needed when creating our docker image, which is why the following line is in the Dockerfile from earlier:

    # Dockerfile
    ...
    RUN npm run prisma:generate
    ...

This ensures that when the project is copied over to the image, the relevant generate command also generates the files needed by the application running inside the docker container.

This is also important to note when changes are needed to the database. Let’s say we wanted to add a new column for middleName. The following actions need to be taken:

- Make the schema change to add the middleName column to the User model
- Bash into the container with docker exec -it [container] sh
- Run npx prisma migrate dev --name add_middle_name
- Run npm run prisma:generate to reflect the schema changes in the Client API (can also be achieved by re-building the docker container)
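Once those steps are done, the new column shows up in the generated types. The sketch below uses a hand-written stand-in for the generated User type, assuming the column was declared as an optional String? field like the other name fields (Prisma maps optional strings to string | null). The UserRecord and fullName names are hypothetical.

```typescript
// Hand-written stand-in for the User type Prisma would generate after the migration
interface UserRecord {
  id: number
  firstName: string | null
  middleName: string | null
  lastName: string | null
}

// Joins whichever name parts are present, skipping nulls and empty strings
function fullName(user: UserRecord): string {
  return [user.firstName, user.middleName, user.lastName]
    .filter((part): part is string => part !== null && part.length > 0)
    .join(" ")
}

const withoutMiddle = fullName({
  id: 1,
  firstName: "Ada",
  middleName: null,
  lastName: "Lovelace",
})
// withoutMiddle === "Ada Lovelace"
```

If the generate step is skipped, the Client API will not know about middleName and TypeScript will complain about the unknown property. That is usually the first hint that the last step was missed.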

A side note

When working with Docker I sometimes like to edit files in vim or nano, which don’t come out of the box with most Docker base images. This starter project uses node:16.15.0-alpine as the base image, and Alpine Linux uses apk to install packages. Here’s the full Dockerfile with vim and nano installed:

    FROM node:16.15.0-alpine

    RUN apk update
    RUN apk add vim nano

    WORKDIR /home/app/api

    COPY prisma ./prisma/
    COPY package*.json ./

    RUN npm install

    COPY . .

    RUN npm run prisma:generate

    CMD ["npm", "run", "dev"]

For Ubuntu based images, apk should be replaced with apt-get (and apk add with apt-get install -y).

Thanks for reading

Each of these parts of an API is not hard to get working in isolation, but when combining them, especially when running on a Mac M1 chip, it can be very painful to see random errors which are hard to debug, so hopefully this has helped out.

Senior Engineer at Haven