Long running, asynchronous server side processes in Node.js

September 13, 2020

This post lists various attempts I made at reducing the time of a long running server side processes in Node.js. The majority of server requests of many web sites/apps take a few hundred milliseconds, but sometimes there’s a process which can take much longer. In my case, the web app takes a user uploaded file and performs computational and asynchronous actions on the data in the file, and as file size of up to 2GB can be uploaded, it’s important that the file is processed as fast and efficiently as possible.

The context

Last year I developed a feature into a web app at work which calculated which locations are within a user specified radius of a certain point. For example, we’d want the system to find all the users whose addresses are within 10 miles of a store. This was developed in to the system in PHP, but I’ve recently had the urge to develop something similar in Node.js. However, rather than it being a part of a separate system, I started making it as a standalone system to be available to anyone on the web.

Here are the steps the user would typically go through when using the system:

- User either uploads a CSV file containing postcodes or pastes a list of postcodes into the site

- User enters the origin postcode, and also the radius of their search

- If a CSV was added, the user selects which column contains the postcodes (so the server knows which column to process)

- The user clicks submit, which sends the file off to be processed by Node.js

Once the file is uploaded to the server and validated, the rows are parsed and each postcode runs through the following 2 notable processes:

- The postcode from the CSV is looked up in a database to find the latitude and longitude

- The latitude and longitide values are used to calculate the distance from the origin location the user entered when submitting the data

Processing the large data upload on the server

For the sake of this post I’ve taken out some of the validation and other unrelated code, but I’ve left in the important parts relevant to the attempts at speeding up the Node.js data processing time.

Processing with map and promise.all() - Attempt 1

As I was first developing the server side process of this app I was testing with only 50 rows in a CSV file. This helped with ease of development and testing, but was never going to be able to support large file uploads.

I needed a starting point so went with a simple map over each row. As each row needs an asynchronous database lookup to find the latitude and longitude of the postcode, each row would return a promise, so the map return each promise to be resolved in a Promise.all:

// The uploaded file has already been parsed and structured into uploadedDataArray at this point

const uploadedAddresses = uploadedDataArray

const originLocationData = objectContainingLatLngOfOriginLocation

const searchRadius = 10

const processedData = await Promise.all(

// Each time uploadedAddresses is mapped, a promise is returned to the Promise.all

uploadedAddresses.map(async (postcode) => {

// Database call, making each map iteration asynchronous

const postcodeData = await findPostcodeData(postcode)

const distance = distance(

postcodeData.latitude,

postcodeData.longitude,

originLocationData.latitude,

originLocationData.longitude

)

return {

coordinates: {

latitude: address.postcodeData.latitude,

longitude: address.postcodeData.longitude,

},

distance,

isWithinRange: distance < searchRadius ? "Yes" : "No",

}

})

)

function distance() {

// distance calculation - returns distance in miles

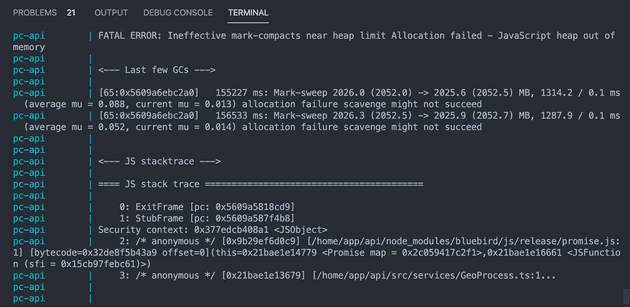

}This worked well for a few rows in the CSV, but when tested with a larger 15mb file containing 100,000 rows, Node crashed with the good old “JavaScript heap out of memory” error after a mere 15 seconds:

This is because the map is iterating over 100,000 rows very quickly (long before the database calls are finishing), adding each promise in memory trying to resolve. Each iteration is also calculating the distance of the each location to the originLocation, and although this isn’t an asynchronous process, it’s a fair chunk of mathematical processing.

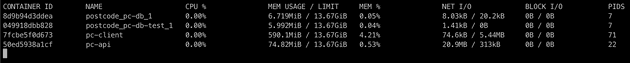

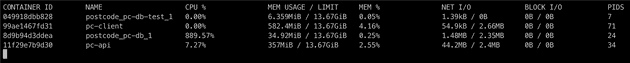

The above explanation can be observed when viewing the live stats from my Docker Compose setup, which is viewable by running docker stats. Here’s the stats before any processing:

And here’s the stats just before the “out of memory” error:

Using a for loop to process sequentially and synchronously - Attempt 2

As the above issue is likely due to the build up of unresolved promises and a load of data held in different parts of memory, my second attempt was to turn the process into a kind of synchronous process, whereby each iteration of the upload data would be processed one at a time, sequentially:

const uploadedAddresses = uploadedDataArray

const originLocationData = objectContainingLatLngOfOriginLocation

const searchRadius = 10

const processedData = []

for (let i = 0; i < uploadedAddresses.length; i++) {

const postcode = uploadedAddresses[i]

const postcodeData = await findPostcodeData(postcode)

const distance = distance(

postcodeData.latitude,

postcodeData.longitude,

originLocationData.latitude,

originLocationData.longitude

)

processedData.push({

coordinates: {

latitude: address.postcodeData.latitude,

longitude: address.postcodeData.longitude,

},

distance,

isWithinRange: distance < this.radius ? "Yes" : "No",

})

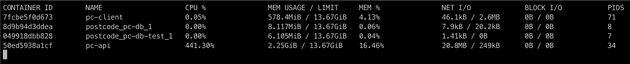

}This stops the JavaScript memory heap error, but takes way too long to carry out the process. Specifically, this attempt takes 37 seconds for every 100 rows of the CSV upload. Here’s a screen shot of the memory and CPU usage for reference:

It’s clear this needs to be improved further. I didn’t want to wait around to see how long the 100,000 rows would have taken, but to extrapolate the timings for 100 rows, it would have taken around 10.2 hours, which is of course too slow.

Running asynchronously with promise concurrency control - Attempt 3

Too many promises and database calls working in memory at once crashes the server, and just one at a time is too slow, but some sort of parallel/asynchronous processing is a good thing - that’s one of the benefits of Node! The server will easily be able to handle multiple concurrent promises and database calls working at the same time, but we want to limit that amount.

A great tool to control the number of promises resolving at any one time is Bluebird - the JavaScript promise library. I’ve only used Bluebird a few times because promises have been natively supported in Node since circa V 0.12, but Bluebird’s Promise.map feature contains an optional concurrency parameter, allowing easy control.

In this third attempt, I worked out an optimal number of concurrent processes to be running at one time which is 500:

const uploadedAddresses = uploadedDataArray

const originLocationData = objectContainingLatLngOfOriginLocation

const searchRadius = 10

const processedData = Promise.map(

uploadedAddresses,

async (postcode: any) => {

const postcodeData = await findPostcodeData(postcode)

const distance = distance(

postcodeData.latitude,

postcodeData.longitude,

originLocationData.latitude,

originLocationData.longitude

)

return {

coordinates: {

latitude: address.postcodeData.latitude,

longitude: address.postcodeData.longitude,

},

distance,

isWithinRange: distance < this.radius ? "Yes" : "No",

}

},

{ concurrency: 500 } // The magic line!

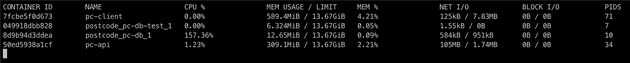

)This means only 500 promises (and therefore database calls) are processing at any one time. This attempt takes only 6 seconds for every 100 rows of the CSV uploaded, which equates to around 1.7 hours for 100,000 rows. Not ideal still by any means, but it’s at least a massive improvement.

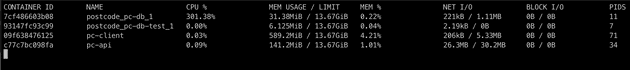

Here’s the memory and CPU usage for reference:

Running asynchronously with large chunked database requests - Attempt 4

The final attempt which I decided to run with in production is this fourth attempt. It’s clear from the previous attempts that the database calls are the bottleneck, which is evident when they are removed, because at that point 100,000 rows of the distance calculation only take a couple of seconds. The database calls are the bottleneck because each row starts a new query, which takes a (relatively) significant amount of time to setup and tear down.

This last attempt tries to address that issue by chunking the postcodes into large arrays, and processing a whole chunk of 500 postcodes at once rather than just one:

const uploadedAddresses = uploadedDataArray

const originLocationData = objectContainingLatLngOfOriginLocation

const searchRadius = 10

// Split the uploaded array in to chunks so each chunk can be queried in one go

const uploadChunks = chunkArray(uploadedAddresses, 500)

// Map over each chunk sequentially (1 concurrency), and get postcode data

const dataWithDbPostcode = await Promise.map(

uploadChunks,

async (uploadChunk: any) => {

// Query using each chunk to do an "array in" query

const dbChunk = await db.postcodes.findAll({

where: {

postcodeNoSpace: {

[db.Sequelize.Op.in]: uploadChunk

}

}

})

return dbChunk

},

{ concurrency: 1 }

)

// Flatten the chunks of uploaded and queried postcodes and get the distance

const processedData = dataWithDbPostcode.flat().map(address => {

const distance = distance(

postcodeData.latitude,

postcodeData.longitude,

originLocationData.latitude,

originLocationData.longitude

)

return {

coordinates: {

latitude: address.dbRow.latitude,

longitude: address.dbRow.longitude,

},

distance,

isWithinRange: distance < this.radius ? "Yes" : "No",

}

})

// Chunk array function

chunkArray(arr, chunk_size) {

var results = [];

while (arr.length) {

results.push(arr.splice(0, chunk_size));

}

return results;

}This solution is much better than the previous ones, taking only 0.9 seconds for every 100 rows of the CSV upload. This does still equate to about 15 minutes for every 100,000 rows, but it’s another huge improvement.

Again, here’s the CPU and memory usage of this attempt:

It’s likely that most of the uploads to a service like this won’t contain a large dataset like 100,000 rows, but a system like this should be able to account for this much data, and not just be capable of processing the information but also provide a good user experience. The user experience of a long running process like this is critical and can be approached in many ways.

Improvements

There are tonnes of ways an engineering problem like the one outlined in this post can be approached. One limitation to the system as it stands though is that the user uploaded file is still stored in memory when imported and assigned to the uploadedAddresses variable in the first line. This is bad because Node has a built in memory limit of something like 1.7GB. This limit can be raised by using --max-old-space-size flag, but as the SO answer in that link explains, the limit shouldn’t be raised too high as it could interfere with other processes on the server, or even stop the serer from running all together.

The Node.js code is also limited by the actual available server memory too, so in my case with a small t2 AWS EC2 box with 2GB, there’s not much wiggle room.

Ideally, the file will not be processed when the whole thing is loaded into memory, but instead make use of one of Node’s most useful and used features which is Streaming. This will allow the file to be processed as it is read, with the data running through the system like a… well, stream!

User experience of long running server processes

There are so many ways this problem can be approached to ensure users are well informed and feel confident in the process, but I’ll list just a few here what I consider to be good practice:

- If the process is likely to only take a minute or less, the UI should let the user know there won’t be long to wait

- If the process is likely to take longer than a minute, the user should be told that the process will continue and they will receive an email once complete, woth a link to view the finished result

- With either option, a “real” loading bar should show the progress of the Node.js process

The loading bar is in my opinion one of the most overlooked UI element, especially in situations like this. I use the term “real” loading bar to refer to a bar which increases the percentage completion in proportion to the percentage of the task which has completed - not a “dumb” loading bar which is downloaded from a create your own GIF website. Not that there’s anything wrong with a GIF loading bar, but there’s a time and a place for them I think.

There’s a great 99% Invisible podcast which explains how influencial the real loading bar was in the early days of computing when comouters were much slower than they are now, so I’d recommend listening to that if you’re ito design and/or user experience.

I like the idea of a loading bar so much that I’m going to create one for this project and use that as the subject for my next blog post!

Senior Engineer at Haven